Synthetic Fraud

We are often asked how GenAI can help companies get better at KYC and fraud detection. I think that is the wrong question. There are some interesting ways for companies to use AI on the fraud side, but we should all be focused on just how good a tool GenAI is for fraudsters, and what that means for how companies approach KYC and fraud detection today.

One type of fraud GenAI will accelerate is synthetic fraud, in which bad actors create fake identities, build a digital presence for those identities, and then use them to open accounts. Deloitte recently estimated that synthetic fraud will generate $23B in fraud losses by the end of the decade. Fraudsters build synthetic identities by layering real and fake information. They often start with a small piece of genuine information, such as a Social Security number, and layer on fictitious details like names, addresses, and phone numbers. This complex web of data makes it challenging to trace the fraudulent activity back to its source. Fraudsters may also let these synthetic identities sit dormant for years before using them, so that the credit bureaus do not flag them as newly created identities.

Creating a credible credit history is often the next step. Fraudsters strategically open small lines of credit or obtain secured credit cards, making timely payments to establish a positive credit history. Over time, this builds the synthetic identity's creditworthiness, enabling larger-scale fraud. The same Deloitte report found that over half of synthetic identities had a credit score above 650. The payoff is substantial: the typical payout is between $81,000 and $98,000, and some single attacks have yielded several million dollars. And yet, our defenses are not working: LexisNexis estimates that 85% of synthetic identities for emerging consumers get past typical checks.

GenAI Creates Golden Age for Fraud

GenAI makes it easier for fraudsters to automate the creation of large numbers of credible synthetic identities. Longer term, it will help them impersonate real people by faking their ID, selfie, and even voice. Recently, a Twitter thread went viral detailing how someone used Stable Diffusion to create not just a fake document, but also an image of a fake person, matching the ID photo, holding it. GenAI's document analysis capabilities also help fraudsters develop more sophisticated forgeries. By understanding how AI-based systems scrutinize documents, criminals can craft counterfeits that reproduce the very features those systems check for, potentially undermining document verification.

And it is not just static documents. In a recent report, the security firm Sensity used deepfakes to copy a target's face onto an ID card to be scanned, and then copied that same face onto a video stream of a would-be attacker in order to pass vendors’ liveness tests. Of the 10 solutions Sensity tested, nine were vulnerable to deepfake attacks.

AI-based liveness detection algorithms, designed to distinguish live individuals from presentation attacks, can inadvertently show fraudsters how to adapt. By probing how these systems assess a capture, criminals can refine their presentation attacks until they pass.

Behavioral biometrics are exposed in the same way. GenAI can help criminals mimic genuine user behaviors, such as typing patterns, mouse movements, or touchscreen gestures, undermining behavioral biometrics as a secure means of identity verification. You could argue the problem goes a step further: AI-driven verification relies heavily on models, and fraudsters who understand a model's underlying logic and learning patterns may be able to deceive it, compromising the integrity of identity verification.

Today’s Tools Won’t Work

Companies are simply unequipped for this new wave of fraud tools. In a world where data breaches have left PII exposed and synthetic identities can be generated without limit, companies need step-up tools to challenge potentially risky users. And yet, many companies still do not have document step-ups. Of those that do, a surprising number accept document uploads (which means a fraudster does not even need to spoof liveness). And now, with GenAI, we see that even companies that capture a live selfie and document image are susceptible to fraud.

Companies often respond by adding probabilistic fraud tools, such as the typing patterns, mouse movements, and touchscreen gestures mentioned above. Unfortunately, these heuristic tools will become increasingly hard to rely on as GenAI helps fraudsters defeat the underlying models. We have long believed that in a world with infinite bad actors, the only way to fight fraud is to find the good actors. We do this by limiting each device to one user and centralizing the proverbial haystack in which all companies look for fraudsters.

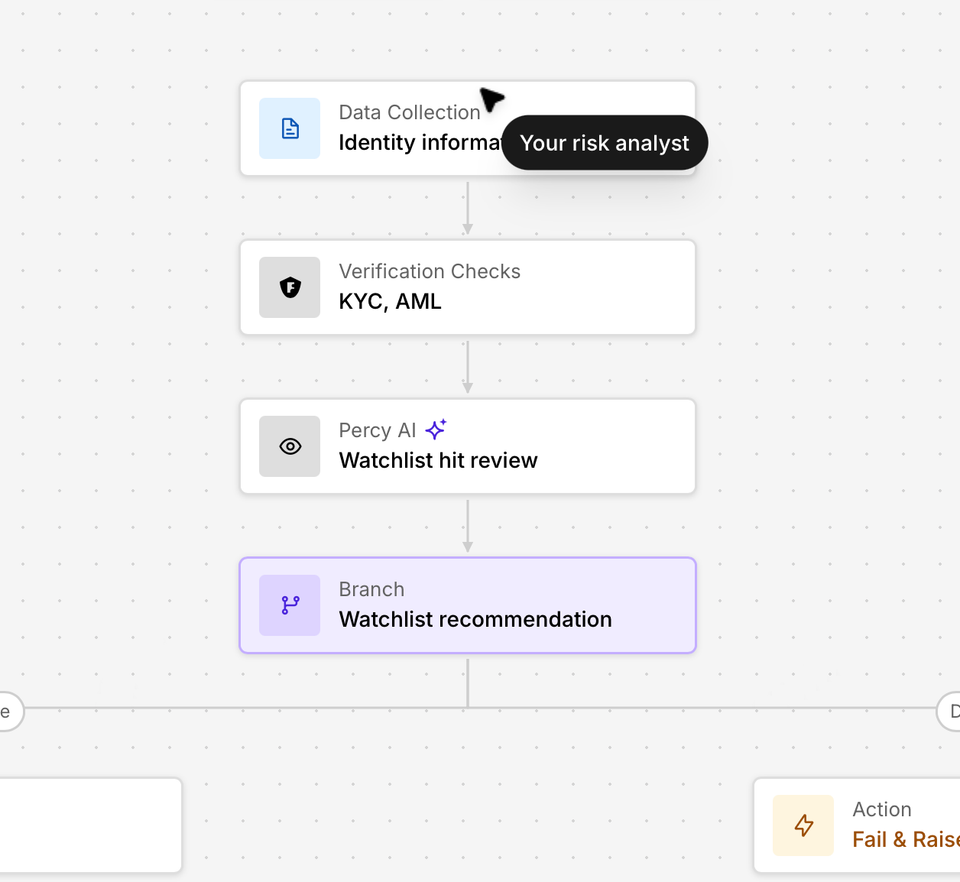

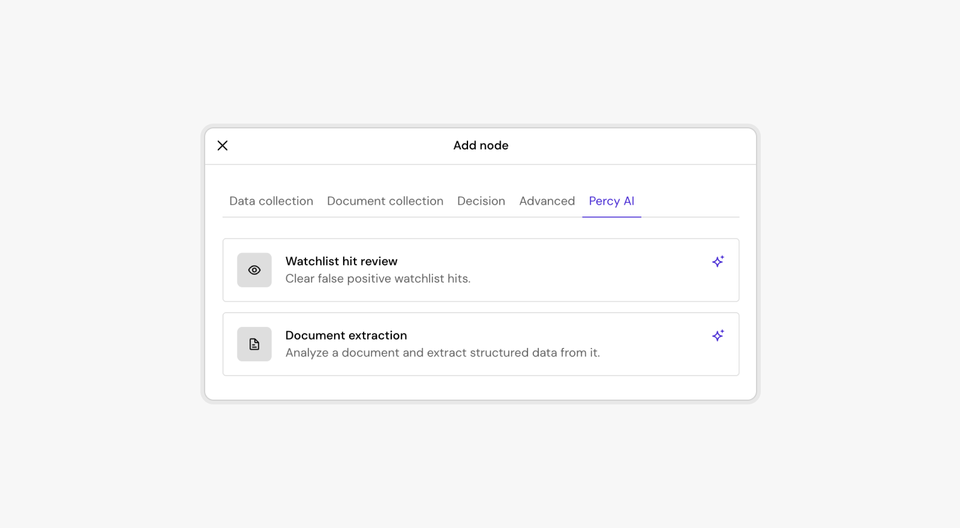

Footprint Enables Companies to Combat GenAI

One of the key ways Footprint fights generative AI fakes is by bringing the “honest” user back into the direct authentication loop. While most companies just serve APIs, Footprint’s end-to-end solution embeds a sophisticated onboarding experience that leverages native device capabilities and attestation to ensure that real users are capturing real images of their face and documents.

This starts with a state-of-the-art capture experience that runs on the device end users already have: their smartphone. Footprint shows a QR code or sends a link to the user’s phone, which opens an App Clip (iOS) or Instant App (Android). This runs native code on the user’s device and can detect whether the device is jailbroken or rooted, whether it is running Footprint’s cryptographically signed code, and whether the device has already been used to verify identities in the past.

When Footprint launches the capture UI, it does more than help users take better-quality images with the device’s native ML and computer vision capabilities (such as fast face detection, object detection, and real-time PDF417 barcode scanning). It also authenticates, via cryptographic device attestation, that the captured image is coming from the native device camera in real time.

This prevents a malicious party from injecting AI-generated content. It also proves that the user was present, or “live,” at the time of capture. Good actors can easily prove that they are authentic, while bad actors will either be detected or will not provide native-device-attested captures at all.
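The attestation step above can be illustrated with a minimal server-side sketch. It assumes a flow that the text does not spell out: the server issues a one-time nonce, the capture app returns the image digest, the nonce, and a capture timestamp, and the device authenticates all three with a device-held key. Real attestation on iOS and Android uses asymmetric, hardware-backed keys. Here HMAC with a per-device secret stands in only so the example runs on Python’s standard library. `Capture`, `CaptureVerifier`, `sign_capture`, and the 30-second window are hypothetical names and values, not Footprint’s implementation.

```python
import hashlib
import hmac
import secrets
import time
from dataclasses import dataclass

# Assumption: real device attestation uses asymmetric, hardware-backed keys.
# HMAC with a per-device secret is a stand-in so this sketch runs on the stdlib.
MAX_AGE_SECONDS = 30.0  # hypothetical freshness window for a "live" capture


@dataclass
class Capture:
    image_digest: bytes  # SHA-256 digest of the captured image (32 bytes)
    nonce: bytes         # one-time challenge issued by the server (16 bytes)
    captured_at: float   # capture time reported by the device (Unix seconds)
    tag: bytes           # device MAC over digest, nonce, and timestamp


def _message(digest: bytes, nonce: bytes, captured_at: float) -> bytes:
    # Digest and nonce have fixed lengths, so plain concatenation is unambiguous.
    return digest + nonce + repr(captured_at).encode()


def sign_capture(device_key: bytes, image: bytes, nonce: bytes, captured_at: float) -> Capture:
    """What the (hypothetical) capture app would send back to the server."""
    digest = hashlib.sha256(image).digest()
    tag = hmac.new(device_key, _message(digest, nonce, captured_at), hashlib.sha256).digest()
    return Capture(digest, nonce, captured_at, tag)


class CaptureVerifier:
    def __init__(self) -> None:
        self._outstanding: set[bytes] = set()

    def issue_nonce(self) -> bytes:
        nonce = secrets.token_bytes(16)
        self._outstanding.add(nonce)
        return nonce

    def verify(self, capture: Capture, device_key: bytes, now: float) -> bool:
        # Each nonce can be redeemed once; unknown or replayed nonces fail.
        if capture.nonce not in self._outstanding:
            return False
        self._outstanding.discard(capture.nonce)
        # Reject captures outside the window, e.g. content generated earlier and injected later.
        if not 0.0 <= now - capture.captured_at <= MAX_AGE_SECONDS:
            return False
        expected = hmac.new(
            device_key,
            _message(capture.image_digest, capture.nonce, capture.captured_at),
            hashlib.sha256,
        ).digest()
        # Constant-time comparison avoids leaking how many tag bytes matched.
        return hmac.compare_digest(expected, capture.tag)


if __name__ == "__main__":
    key = secrets.token_bytes(32)
    verifier = CaptureVerifier()
    nonce = verifier.issue_nonce()
    t = time.time()
    capture = sign_capture(key, b"selfie-bytes", nonce, t)
    print(verifier.verify(capture, key, now=t + 5))  # True
    print(verifier.verify(capture, key, now=t + 5))  # False: nonce already used
```

The nonce makes each capture single-use, and the timestamp window rejects content produced ahead of time and replayed later. Together they approximate the “live at the time of capture” property described above, under the stated assumptions.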

Once a good actor verifies their identity, they generate a secure cryptographic credential called a Passkey, so that future logins and onboardings take a single click yet carry the same verification history as the original onboarding.

Above all, the most important thing we do is limit users who onboard with Footprint to one identity per device. No matter how powerful GenAI gets, it cannot reset this counter. Fancy probabilistic tools are not the answer here: the only way to stop GenAI from abetting synthetic fraudsters is to reduce the number of identities they can hold on a device to one.
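The one-identity-per-device rule can be sketched as a simple binding table. This is a minimal illustration, assuming a stable, hardware-attested device identifier (`device_id`) that a fraudster cannot reset by reinstalling the app or wiping the phone. Producing that identifier is the hard part and is not shown. `DeviceRegistry`, `enroll`, and the example IDs are hypothetical names, not Footprint’s API.

```python
from dataclasses import dataclass, field


@dataclass
class DeviceRegistry:
    """Binds each attested device to at most one verified identity (hypothetical sketch)."""

    # device_id -> identity_id; in practice this would be a durable, shared store.
    bindings: dict[str, str] = field(default_factory=dict)

    def enroll(self, device_id: str, identity_id: str) -> bool:
        """Return True if identity_id may verify on device_id, binding it on first use."""
        bound = self.bindings.get(device_id)
        if bound is None:
            # First verification on this device: bind it to this identity.
            self.bindings[device_id] = identity_id
            return True
        # Re-verification by the same identity is allowed; a different identity is refused.
        return bound == identity_id


if __name__ == "__main__":
    registry = DeviceRegistry()
    print(registry.enroll("device-A", "alice"))        # True: first identity on this device
    print(registry.enroll("device-A", "alice"))        # True: same identity re-verifying
    print(registry.enroll("device-A", "synthetic-1"))  # False: second identity refused
```

The refusal is deterministic rather than a model score, so there is no decision boundary for a fraudster to probe with generated content.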